Markus Worchel

Links:

Personal website

Publications

Interpolating splines over triangulated surfaces by blending vertex-centric local geometries (2025)

Computers & Graphics

@article{DJUREN2025104316,

title = {Interpolating splines over triangulated surfaces by blending vertex-centric local geometries},

journal = {Computers \& Graphics},

volume = {131},

pages = {104316},

year = {2025},

issn = {0097-8493},

doi = {10.1016/j.cag.2025.104316},

url = {https://www.sciencedirect.com/science/article/pii/S0097849325001578},

author = {Tobias Djuren and Ugo Finnendahl and Maximilian Kohlbrenner and Markus Worchel and Marc Alexa},

keywords = {Rational interpolation, Splines, Local control},

abstract = {We investigate the construction of visually smooth spline surfaces that interpolate the vertices of triangulations by blending local patches. Each triangle star carries a locally interpolating surface patch. The patches are only required to interpolate the vertex, whereas in previous methods the patches are often defined per edge, imposing multiple constraints on local approximations. We adopt simple rational blend functions for the triangular domains, which are constructed so that they retain the interpolation and tangent behavior on the patch boundaries. Decoupling local approximation from blending facilitates the exploration of visually pleasing constructions, while controlling the complexity.}

}

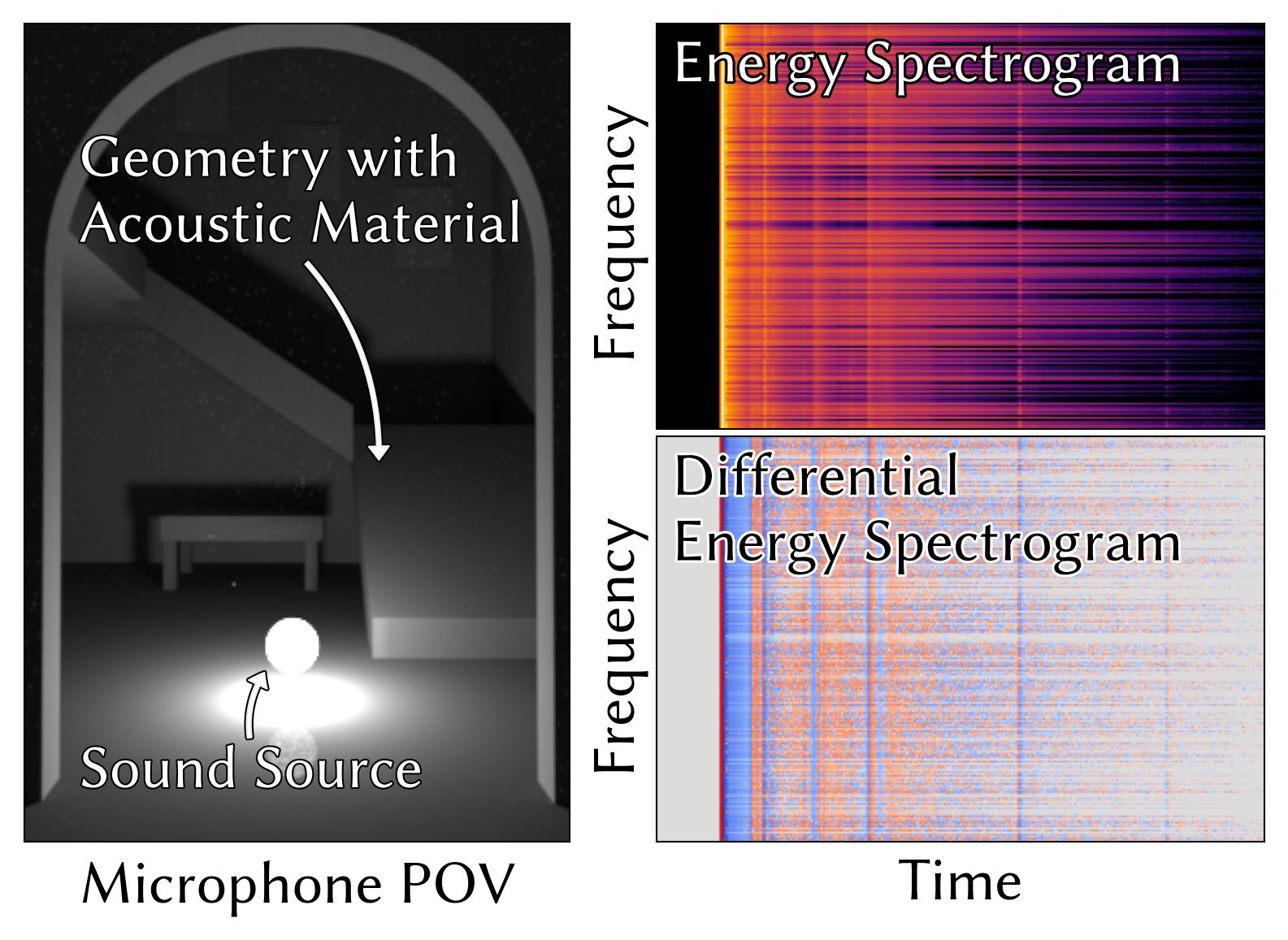

Differentiable Geometric Acoustic Path Tracing using Time-Resolved Path Replay Backpropagation (2025)

ACM Transactions on Graphics (Proc. of SIGGRAPH)

@article{finnendahl:2025:diffacousticpt,

author = {Finnendahl, Ugo and Worchel, Markus and J\"{u}terbock, Tobias and Wujecki, Daniel and Brinkmann, Fabian and Weinzierl, Stefan and Alexa, Marc},

title = {Differentiable Geometric Acoustic Path Tracing using Time-Resolved Path Replay Backpropagation},

year = {2025},

issue_date = {August 2025},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {44},

number = {4},

issn = {0730-0301},

url = {https://doi.org/10.1145/3730900},

doi = {10.1145/3730900},

journal = {ACM Trans. Graph.},

month = jul,

articleno = {82},

numpages = {17},

keywords = {differentiable rendering, geometrical acoustics, physically-based simulation, acoustic optimization}

}

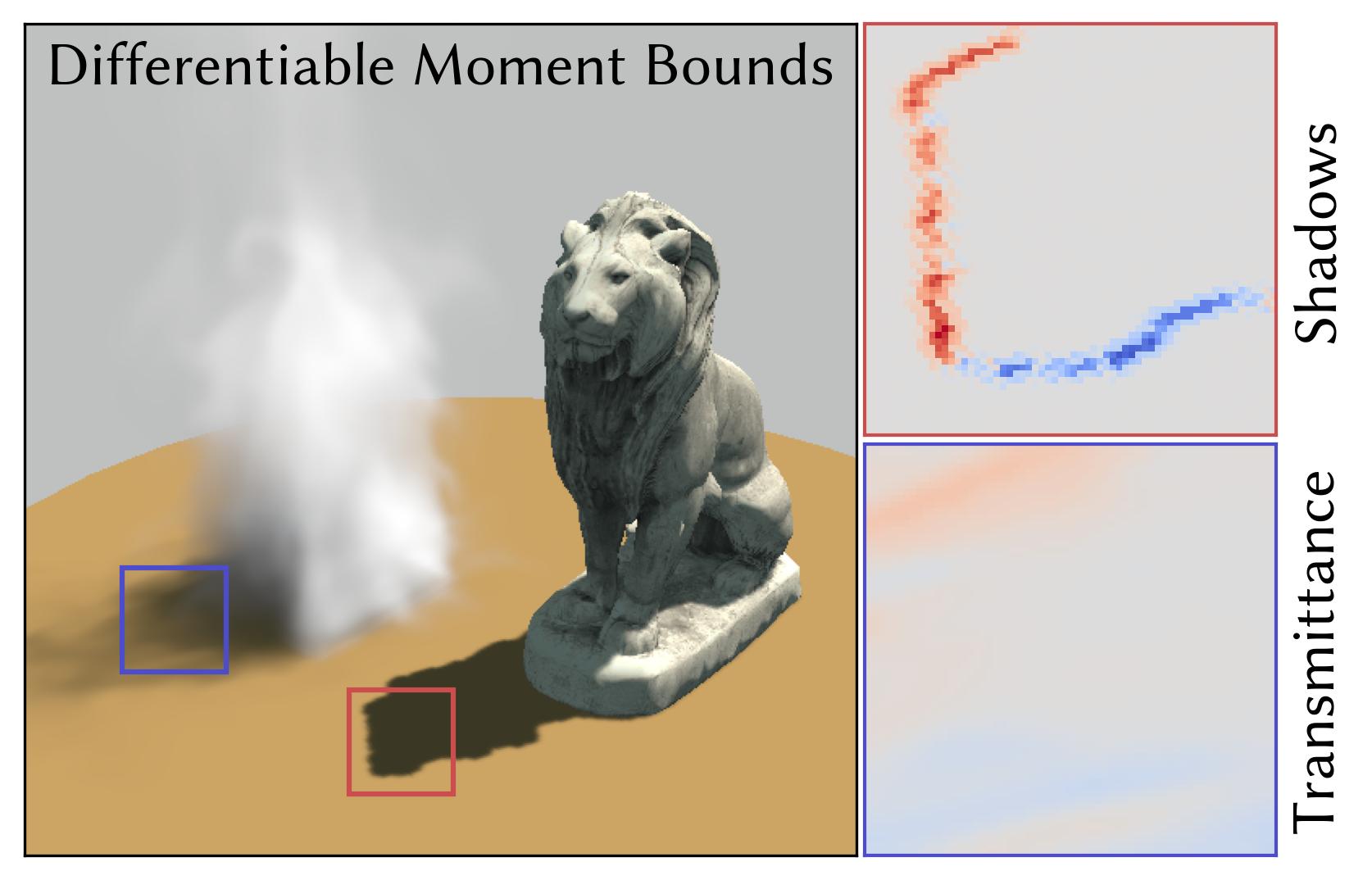

Moment Bounds are Differentiable: Efficiently Approximating Measures in Inverse Rendering (2025)

ACM Transactions on Graphics (Proc. of SIGGRAPH)

@article{worchel:2025:diffmoments,

author = {Worchel, Markus and Alexa, Marc},

title = {Moment Bounds are Differentiable: Efficiently Approximating Measures in Inverse Rendering},

year = {2025},

issue_date = {August 2025},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {44},

number = {4},

issn = {0730-0301},

url = {https://doi.org/10.1145/3730899},

doi = {10.1145/3730899},

journal = {ACM Trans. Graph.},

month = jul,

articleno = {80},

numpages = {21},

keywords = {differentiable rendering, shadows}

}

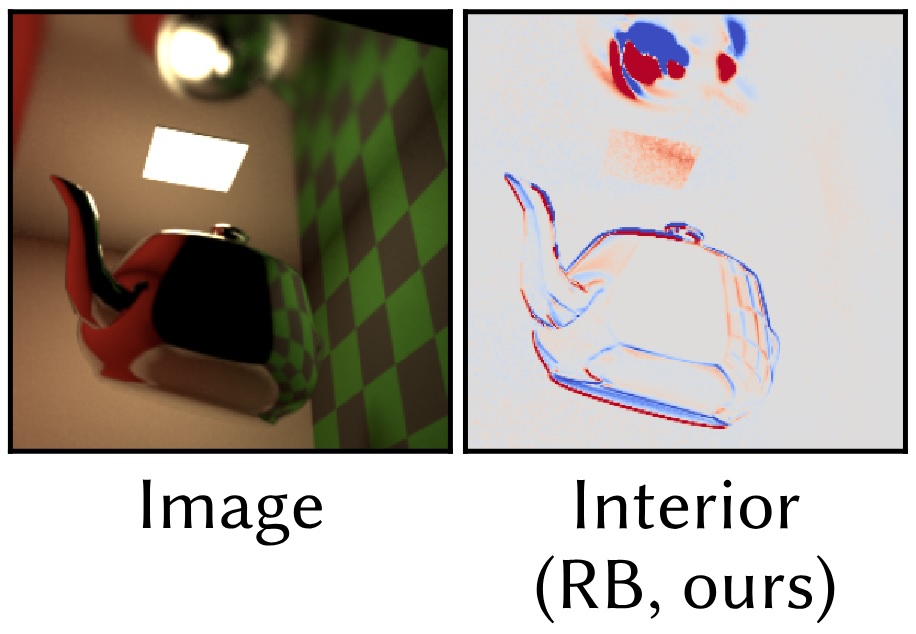

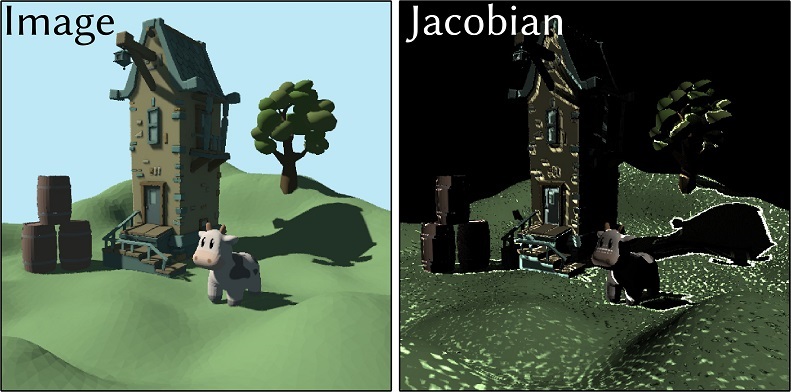

Radiative Backpropagation with Non-Static Geometry (2025)

Eurographics Symposium on Rendering (EGSR)

@inproceedings{10.2312:sr.20251198,

booktitle = {Eurographics Symposium on Rendering},

editor = {Wang, Beibei and Wilkie, Alexander},

title = {{Radiative Backpropagation with Non-Static Geometry}},

author = {Worchel, Markus and Finnendahl, Ugo and Alexa, Marc},

year = {2025},

publisher = {The Eurographics Association},

ISSN = {1727-3463},

ISBN = {978-3-03868-292-9},

DOI = {10.2312/sr.20251198}

}

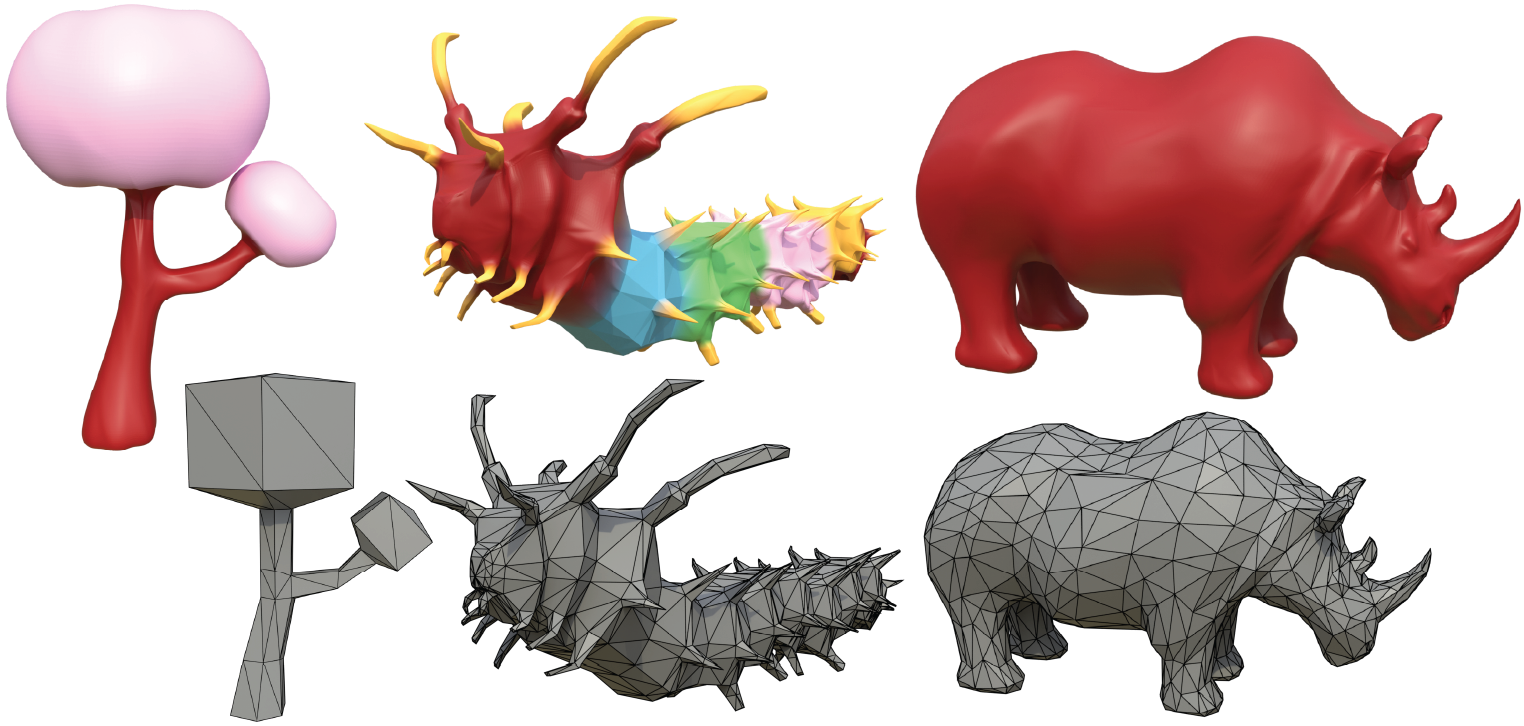

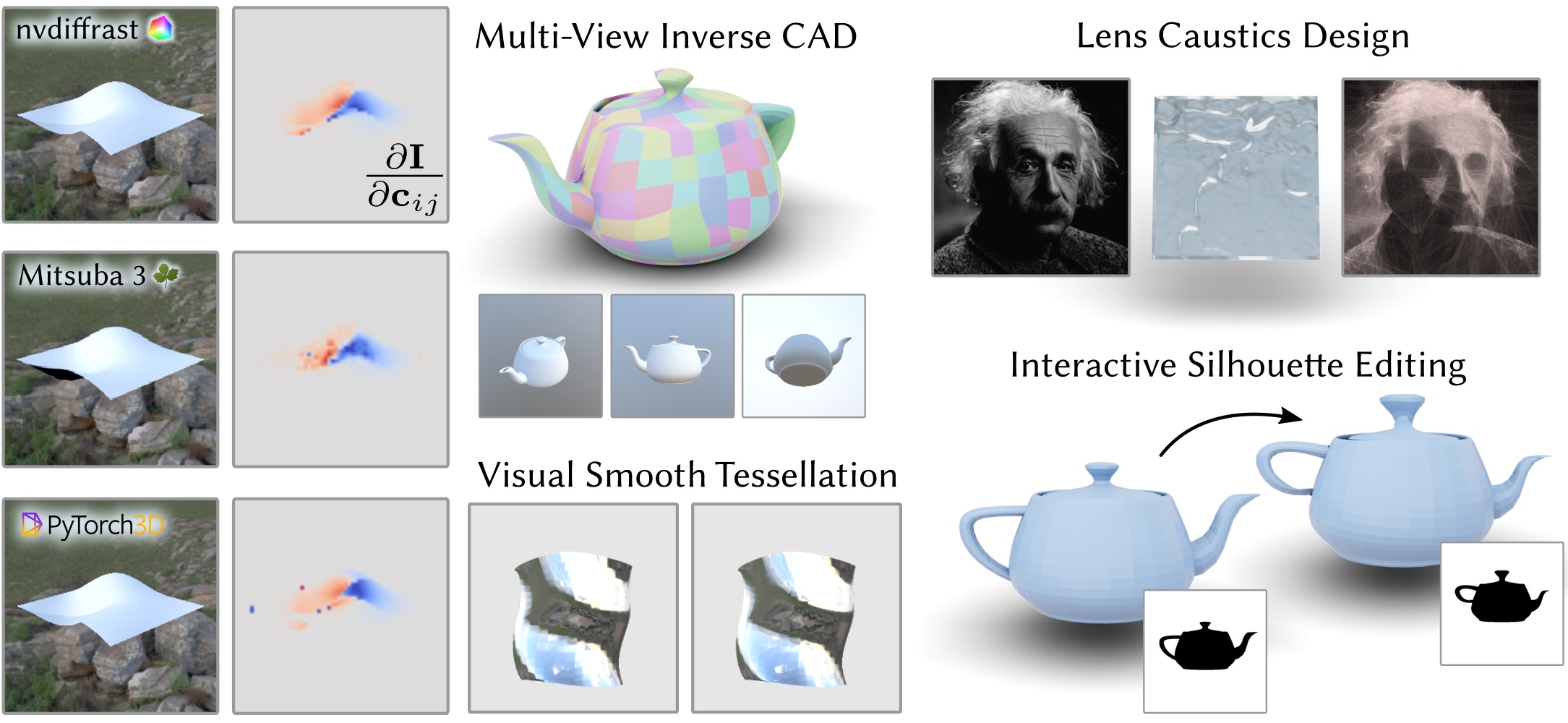

Differentiable Rendering of Parametric Geometry (2023)

ACM Transactions on Graphics (Proc. of SIGGRAPH Asia)

@article{Worchel:2023:DRPG,

author = {Worchel, Markus and Alexa, Marc},

title = {Differentiable Rendering of Parametric Geometry},

year = {2023},

issue_date = {December 2023},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {42},

number = {6},

issn = {0730-0301},

url = {https://doi.org/10.1145/3618387},

doi = {10.1145/3618387},

abstract = {We propose an efficient method for differentiable rendering of parametric surfaces and curves, which enables their use in inverse graphics problems. Our central observation is that a representative triangle mesh can be extracted from a continuous parametric object in a differentiable and efficient way. We derive differentiable meshing operators for surfaces and curves that provide varying levels of approximation granularity. With triangle mesh approximations, we can readily leverage existing machinery for differentiable mesh rendering to handle parametric geometry. Naively combining differentiable tessellation with inverse graphics settings lacks robustness and is prone to reaching undesirable local minima. To this end, we draw a connection between our setting and the optimization of triangle meshes in inverse graphics and present a set of optimization techniques, including regularizations and coarse-to-fine schemes. We show the viability and efficiency of our method in a set of image-based computer-aided design applications.},

journal = {ACM Trans. Graph.},

month = dec,

articleno = {232},

numpages = {18},

keywords = {differentiable rendering, geometry reconstruction}

}

Differentiable Shadow Mapping for Efficient Inverse Graphics (2023)

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

@inproceedings{worchel:2023:diff_shadow,

title = {Differentiable Shadow Mapping for Efficient Inverse Graphics},

author = {Markus Worchel and Marc Alexa},

url = {https://openaccess.thecvf.com/content/CVPR2023/html/Worchel_Differentiable_Shadow_Mapping_for_Efficient_Inverse_Graphics_CVPR_2023_paper.html},

note = {Project page: https://mworchel.github.io/differentiable-shadow-mapping/; Code: https://github.com/mworchel/differentiable-shadow-mapping},

year = {2023},

date = {2023-06-01},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

pages = {142--153},

abstract = {We show how shadows can be efficiently generated in differentiable rendering of triangle meshes. Our central observation is that pre-filtered shadow mapping, a technique for approximating shadows based on rendering from the perspective of a light, can be combined with existing differentiable rasterizers to yield differentiable visibility information. We demonstrate on several inverse graphics problems that differentiable shadow maps are orders of magnitude faster than differentiable light transport simulation with similar accuracy -- while differentiable rasterization without shadows often fails to converge.},

keywords = {computer graphics, differentiable rendering, machine learning, neural rendering},

pubstate = {published},

tppubtype = {inproceedings}

}

Multi-View Mesh Reconstruction With Neural Deferred Shading (2022)

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

@inproceedings{Worchel:2022:NDS,

title = {Multi-View Mesh Reconstruction With Neural Deferred Shading},

author = {Markus Worchel and Rodrigo Diaz and Weiwen Hu and Oliver Schreer and Ingo Feldmann and Peter Eisert},

url = {https://openaccess.thecvf.com/content/CVPR2022/html/Worchel_Multi-View_Mesh_Reconstruction_With_Neural_Deferred_Shading_CVPR_2022_paper.html},

note = {Project page: https://fraunhoferhhi.github.io/neural-deferred-shading/; Code: https://github.com/fraunhoferhhi/neural-deferred-shading},

year = {2022},

date = {2022-06-01},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

pages = {6187--6197},

abstract = {We propose an analysis-by-synthesis method for fast multi-view 3D reconstruction of opaque objects with arbitrary materials and illumination. State-of-the-art methods use both neural surface representations and neural rendering. While flexible, neural surface representations are a significant bottleneck in optimization runtime. Instead, we represent surfaces as triangle meshes and build a differentiable rendering pipeline around triangle rasterization and neural shading. The renderer is used in a gradient descent optimization where both a triangle mesh and a neural shader are jointly optimized to reproduce the multi-view images. We evaluate our method on a public 3D reconstruction dataset and show that it can match the reconstruction accuracy of traditional baselines and neural approaches while surpassing them in optimization runtime. Additionally, we investigate the shader and find that it learns an interpretable representation of appearance, enabling applications such as 3D material editing.},

keywords = {3D reconstruction, computer graphics, computer vision, differentiable rendering, machine learning, neural rendering},

pubstate = {published},

tppubtype = {inproceedings}

}

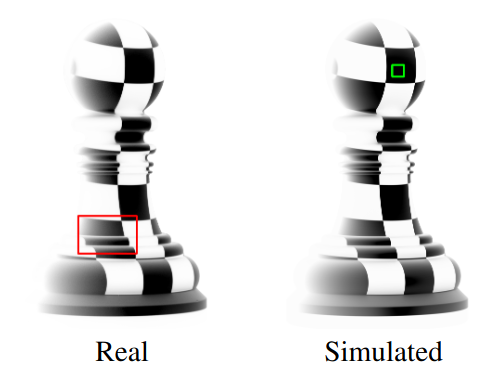

Hardware Design and Accurate Simulation of Structured-Light Scanning for Benchmarking of 3D Reconstruction Algorithms (2021)

Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2021)

@incollection{Koch:2021:HDA,

title = {Hardware Design and Accurate Simulation of Structured-Light Scanning for Benchmarking of 3D Reconstruction Algorithms},

author = {Sebastian Koch and Yurii Piadyk and Markus Worchel and Marc Alexa and Claudio Silva and Denis Zorin and Daniele Panozzo},

url = {https://geometryprocessing.github.io/scanner-sim},

note = {Project page},

year = {2021},

date = {2021-10-10},

booktitle = {Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2021)},

issuetitle = {Datasets and Benchmarks Track},

abstract = {Images of a real scene taken with a camera commonly differ from synthetic images of a virtual replica of the same scene, despite advances in light transport simulation and calibration. By explicitly co-developing the Structured-Light Scanning (SLS) hardware and rendering pipeline we are able to achieve negligible per-pixel difference between the real image and the synthesized image on geometrically complex calibration objects with known material properties. This approach provides an ideal test-bed for developing and evaluating data-driven algorithms in the area of 3D reconstruction, as the synthetic data is indistinguishable from real data and can be generated at large scale by simulation. We propose three benchmark challenges using a combination of acquired and synthetic data generated with our system: (1) a denoising benchmark tailored to structured-light scanning, (2) a shape completion benchmark to fill in missing data, and (3) a benchmark for surface reconstruction from dense point clouds. Besides, we provide a large collection of high-resolution scans on our website that allow using our system and benchmarks without reproducing the hardware setup.},

howpublished = {https://openreview.net/forum?id=bNL5VlTfe3p},

keywords = {computer graphics},

pubstate = {published},

tppubtype = {incollection}

}

Supervised Theses

Gauss Stylization for Implicit Surfaces

11.12.2025

Loch, Tobias

Master

Representing Direction-Dependent Functions in NeRFs over Triangulations on the Sphere

22.10.2025

Fleischer, Sandro

Bachelor

Differentiable Reflection Models for Acoustic Path Tracing

11.09.2025

Schmidt, Thilo

Master

Improving The View Synthesis Quality of Partially Trained Radiance Fields Using Depth Image Based Rendering

24.03.2025

Waltermann, Patrick

Bachelor

Representing Radiance Fields in Wavelet Bases

06.12.2024

Pomierski, Paweł

Master

Splatting Spatially Varying Gaussians

03.12.2024

Löhr, Simon

Master

Differentiable Ray Tracing of Bézier Surfaces

21.10.2024

Schmidt, Patrick

Bachelor

Differentiable Indirect Illumination using Visibility-Aware Light Probes

01.08.2024

Bielig, Laura

Master

Context-Aware Neural Subdivision

09.04.2024

Shvo, Ilay

Bachelor

Reconstructing Curves Using The Difference of Max-Affine Functions

03.07.2023

Steffen, Alexander

Bachelor

Learning Control Points of Kappa Curves with Deep Neural Nets

30.06.2023

Algaç, Ufuk

Bachelor